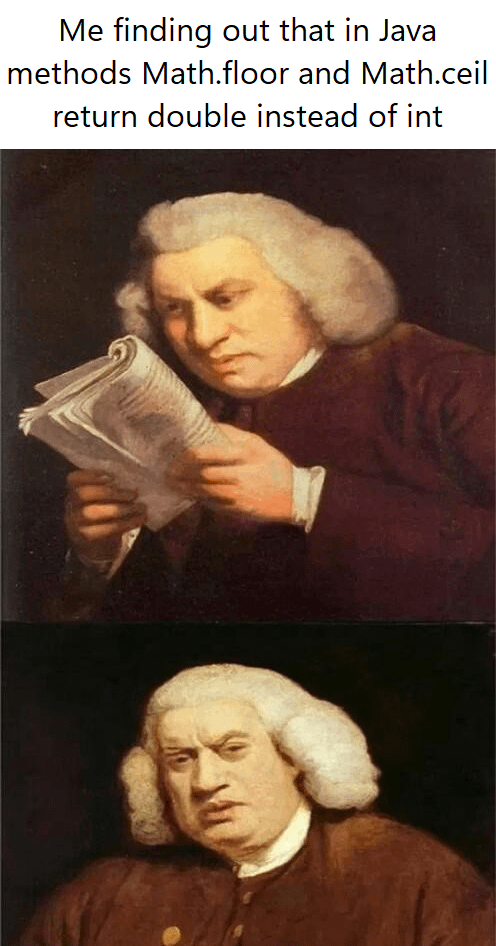

Makes sense, because a double can represent way bigger numbers than an integer.

Programmer Humor


Also, double can and does in fact represent integers exactly.

Only up to 2^53: beyond that, not every integer has an exact double (2^53 + 1 doesn't). A long can represent more integers exactly. But a double can and does represent every int value exactly.
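A small Java check of the 2^53 limit, offered as a sketch; the helper name `isExactAsDouble` is made up for illustration. It converts a long to a double and compares the exact values through BigDecimal. A plain `(long)` round trip is avoided on purpose, because that cast saturates near Long.MAX_VALUE and could report a false match.

```java
import java.math.BigDecimal;

public class DoubleIntegerRange {
    // Hypothetical helper: true if n survives conversion to double with no rounding.
    // new BigDecimal(double) holds the double's exact value, so compareTo is an exact test.
    static boolean isExactAsDouble(long n) {
        return new BigDecimal((double) n).compareTo(BigDecimal.valueOf(n)) == 0;
    }

    public static void main(String[] args) {
        long twoTo53 = 1L << 53; // 9007199254740992
        System.out.println(isExactAsDouble(twoTo53));           // true
        System.out.println(isExactAsDouble(twoTo53 + 1));       // false: rounds to 2^53
        System.out.println(isExactAsDouble(1L << 60));          // true: powers of two stay exact
        System.out.println(isExactAsDouble(Integer.MAX_VALUE)); // true
        System.out.println(isExactAsDouble(Integer.MIN_VALUE)); // true
    }
}
```

Every int needs at most 31 bits of magnitude, well inside the 53-bit significand, which is why the int cases always pass.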

It's the same in the standard C library, so Java is being consistent with a real programming language…

Implying Java isn't a real programming language. Smh my head.

Java has many abstractions that can be beneficial in certain circumstances. However, it forces a design principle that may not work best in every situation.

E.g., inheritance can be both unnatural for the programmer to think in and unrepresentative of how data is stored and manipulated on a computer.

We’re gate-keeping the most mainstream programming language now? Next you’ll say English isn’t a real language because it doesn’t have a native verb tense to express hearsay.

Java is, of course, Turing Complete™️, but when you have to hide all the guns and knives in jdk.internal.misc.Unsafe, something is clearly wrong.

Memory is an implementation detail. You are interested in solving problems, not pushing bytes around, unless that is the problem itself. In 99% of cases, though, you don’t need guns and knives; it’s not a US school (sorry).

Nice ATM machine.

Smhmh my head

Doubles have a much higher max value than ints, so if the method converted every double to an int, it would not work for double values above 2^31-1.

(Strictly, it would still work, but any value above 2^31-1 passed to such a function would be clamped to 2^31-1, and any value below -2^31 would be clamped to -2^31.)
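To see that clamping in practice, here is a Java sketch; `floorToIntUnchecked` is a hypothetical name for the naive "floor, then cast" approach. Narrowing a double to an int follows the Java Language Specification (§5.1.3): out-of-range values saturate at Integer.MAX_VALUE or Integer.MIN_VALUE, and NaN becomes 0.

```java
public class CastClamping {
    // Hypothetical helper: the naive "floor, then narrow with a cast" approach.
    static int floorToIntUnchecked(double x) {
        return (int) Math.floor(x);
    }

    public static void main(String[] args) {
        System.out.println(floorToIntUnchecked(3.7));        // 3
        System.out.println(floorToIntUnchecked(-3.7));       // -4
        System.out.println(floorToIntUnchecked(3e9));        // 2147483647 (clamped to Integer.MAX_VALUE)
        System.out.println(floorToIntUnchecked(-3e9));       // -2147483648 (clamped to Integer.MIN_VALUE)
        System.out.println(floorToIntUnchecked(Double.NaN)); // 0
    }
}
```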

But there's really no point in flooring a double outside the range where integers can be represented exactly, is there?
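One way to see why flooring huge doubles is pointless: the spacing between adjacent doubles reaches 1 at 2^52, so every double whose magnitude is at least 2^52 is already an integer, and Math.floor returns it unchanged. A sketch, with `floorIsNoOp` as a made-up helper name:

```java
public class FloorNoOp {
    // Hypothetical helper: true when Math.floor leaves x unchanged.
    static boolean floorIsNoOp(double x) {
        return Math.floor(x) == x;
    }

    public static void main(String[] args) {
        double twoTo52 = 0x1p52; // 2^52 as a hex floating-point literal
        System.out.println(floorIsNoOp(twoTo52 - 0.5));    // false: still has a .5 fraction
        System.out.println(floorIsNoOp(twoTo52 + 1));      // true
        System.out.println(floorIsNoOp(-1e20));            // true
        System.out.println(floorIsNoOp(Double.MAX_VALUE)); // true
    }
}
```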

What about using two ints?

What about two int64_t?

Yeah, that would be pretty effective. Could also go to three, just to be safe.

Make it four, just to be even.

A BigDecimal?

Makes sense: how would you represent floor(1e42) or ceil(1e120) as an integer? It would not fit into a 32-bit integer, signed (31 value bits) or unsigned. Not even into a 64-bit integer.

BigInt (yeah, not native everywhere)
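A minimal sketch of the BigInt idea in Java, where the type is java.math.BigInteger. The helper `floorToBigInteger` is hypothetical; it goes through BigDecimal so the exact value of the double is kept, then rounds toward negative infinity.

```java
import java.math.BigDecimal;
import java.math.BigInteger;
import java.math.RoundingMode;

public class BigFloor {
    // Hypothetical helper: exact floor of a finite double as an arbitrary-precision integer.
    static BigInteger floorToBigInteger(double x) {
        if (Double.isNaN(x) || Double.isInfinite(x)) {
            throw new ArithmeticException("not a finite value: " + x);
        }
        // new BigDecimal(double) captures the double's exact binary value (no decimal rounding);
        // setScale(0, FLOOR) then rounds toward negative infinity.
        return new BigDecimal(x).setScale(0, RoundingMode.FLOOR).toBigIntegerExact();
    }

    public static void main(String[] args) {
        System.out.println(floorToBigInteger(-2.5)); // -3
        BigInteger big = floorToBigInteger(1e42);
        System.out.println(big); // exact value of the double nearest to 1e42
        System.out.println(big.compareTo(BigInteger.valueOf(Long.MAX_VALUE)) > 0); // true
    }
}
```

The result for 1e42 is the exact value of the nearest double, which is not exactly 10^42, since 10^42 has no exact binary64 representation.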

I feel this is worse than double, though, because it's a library type rather than a basic type. But I guess ceil and floor are also library functions, unlike toInt.

It would be kinda dumb to force everyone to keep casting back to a double, no? If the output were positive, should it have returned an unsigned integer as well?

I think one of the main reasons to use floor/ceiling is to predictably cast a double to an int. This type signature kind of defeats that important purpose.
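If the goal is a predictable double-to-int conversion, one option is a small checked helper rather than a different return type for Math.floor. This is a sketch; `floorToIntExact` is a made-up name, not a JDK method. It throws instead of silently clamping.

```java
public class CheckedFloor {
    // Hypothetical helper: floor x and convert to int, throwing instead of clamping.
    static int floorToIntExact(double x) {
        double f = Math.floor(x);
        // Written as !(in range) so that NaN, which fails every comparison, is rejected too
        if (!(f >= Integer.MIN_VALUE && f <= Integer.MAX_VALUE)) {
            throw new ArithmeticException("floor(" + x + ") does not fit in an int");
        }
        return (int) f; // exact: f is an integer within int range
    }

    public static void main(String[] args) {
        System.out.println(floorToIntExact(-2.5)); // -3
        System.out.println(floorToIntExact(7.99)); // 7
        try {
            floorToIntExact(3e9);
        } catch (ArithmeticException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```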

I don't know the historical context of Java, but possibly at that time people saw types more as a burden than as a way to guarantee correctness? (Which is kind of still the case for many programmers, unfortunately.)

You wouldn’t need floor/ceil for that. Casting a double to an int is already predictable: the Java Language Specification explicitly defines it (round toward zero, clamp to the int range, NaN becomes 0), so every JVM does it exactly the same way.
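The cast and floor only disagree for negative non-integers, because the cast rounds toward zero while floor rounds toward negative infinity. A short Java demo (the class name is arbitrary):

```java
public class TruncateVsFloor {
    public static void main(String[] args) {
        for (double x : new double[] {2.7, -2.7}) {
            int cast = (int) x;              // rounds toward zero
            int floor = (int) Math.floor(x); // rounds toward negative infinity
            int ceil = (int) Math.ceil(x);   // rounds toward positive infinity
            System.out.println(x + ": cast=" + cast + ", floor=" + floor + ", ceil=" + ceil);
        }
        // Prints:
        // 2.7: cast=2, floor=2, ceil=3
        // -2.7: cast=-2, floor=-3, ceil=-2
    }
}
```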

The floor/ceil functions are simply primitive math operations, meant to be used when doing floating-point math.

Math functions generally return the same type as their input parameters, which makes sense. The exceptions are those explicitly meant for converting between types.

"Predictable" in the sense that people know how it works regardless of which language they know.

I guess I mean "no surprise for the reader", which is more "readability" than "predictability"

Is there any language that doesn’t just truncate when casting from a float to an int?

As far as I know, Haskell does not allow coercion of a float to an int without specifying a method (floor, ceiling, round, etc.): https://hoogle.haskell.org/?hoogle=Float+-%3E+Integer&scope=set%3Astackage

Agda seems to do the same: https://agda.github.io/agda-stdlib/Data.Float.Base.html

Python is like this too. I don’t remember a language that returned ints.

Python 2 returns a float; Python 3 returns an int, IIRC.

My God this is the most relevant meme I've ever seen

AFAIK most typed languages have this behaviour.

Because ints are way smaller. Above a certain value, it would always fail.

Logic: in math, if you have a real and you round it, it's always a real, not an integer. If we follow your reasoning, abs(-1) on an integer should return an unsigned value, and that makes no sense.

> in math, if you have a real and you round it, it's always a real, not an integer.

No, that's made up. Outside of very specific niche contexts, the concept of a number having a single well-defined type isn't relevant in math like it is in programming. The number 1 is almost always considered both an integer and a real number.

> If we follow your reasoning, abs(-1) on an integer should return an unsigned value, and that makes no sense.

How does that not make sense? The abs of an integer is always a nonnegative integer value, so why couldn't it be an unsigned int?
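There is one int where this argument has teeth: Math.abs(Integer.MIN_VALUE). The true magnitude, 2^31, does not fit in a signed int, so Math.abs overflows and returns Integer.MIN_VALUE, while an unsigned 32-bit reading of the same bits gives the right answer. Java has no unsigned int type, but Integer.toUnsignedLong (Java 8+) can reinterpret the bits, as this sketch shows:

```java
public class AbsOverflow {
    public static void main(String[] args) {
        int min = Integer.MIN_VALUE; // -2^31
        int abs = Math.abs(min);     // 2^31 does not fit in a signed int, so this wraps back to -2^31
        System.out.println(abs);                         // -2147483648
        // The same 32 bits read as an unsigned value give the true magnitude
        System.out.println(Integer.toUnsignedLong(abs)); // 2147483648
        System.out.println(Math.abs(-1));                // 1
    }
}
```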

I'm OK with that, but what I mean is that it makes no sense to change the type of the provided value if, in mathematics, the type can stay the same.

It's like Java not having unsigned integers...

Try Math.round. It’s been like ten years since I used Java, but I’m pretty sure it’s in there.
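Math.round does exist in java.lang.Math: for a double it returns a long, and for a float it returns an int. A quick sketch of its behavior on ties and on values too large for the result type:

```java
public class RoundDemo {
    public static void main(String[] args) {
        long a = Math.round(2.5);  // 3: ties round toward positive infinity
        long b = Math.round(-2.5); // -2, not -3, for the same reason
        int c = Math.round(2.5f);  // the float overload returns an int
        long d = Math.round(1e20); // too large for long, so it saturates at Long.MAX_VALUE
        System.out.println(a + " " + b + " " + c + " " + d); // 3 -2 3 9223372036854775807
    }
}
```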

I like big numbers and I cannot tell a lie