The posts seem to be getting better lately

ChaoticNeutralCzech

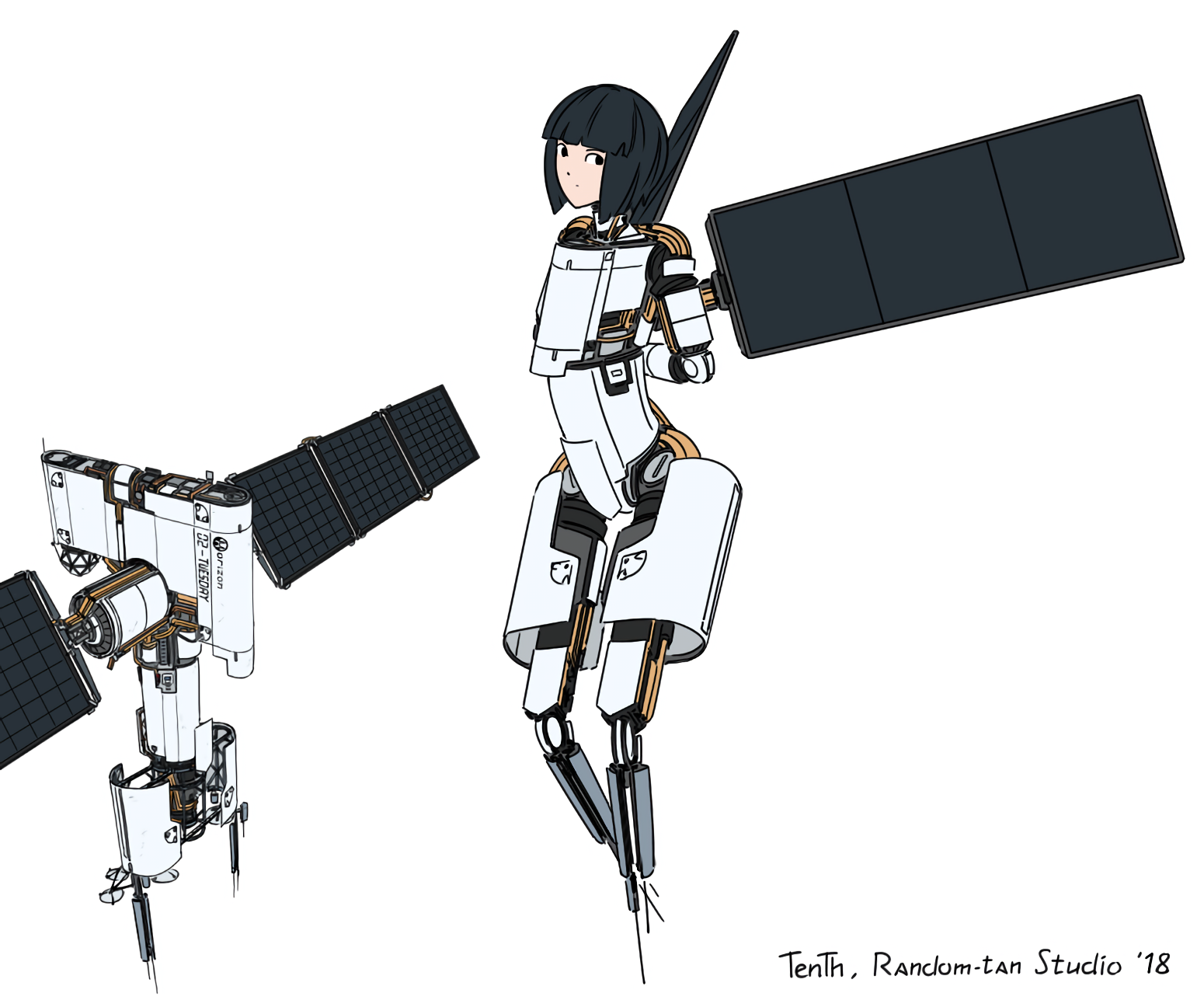

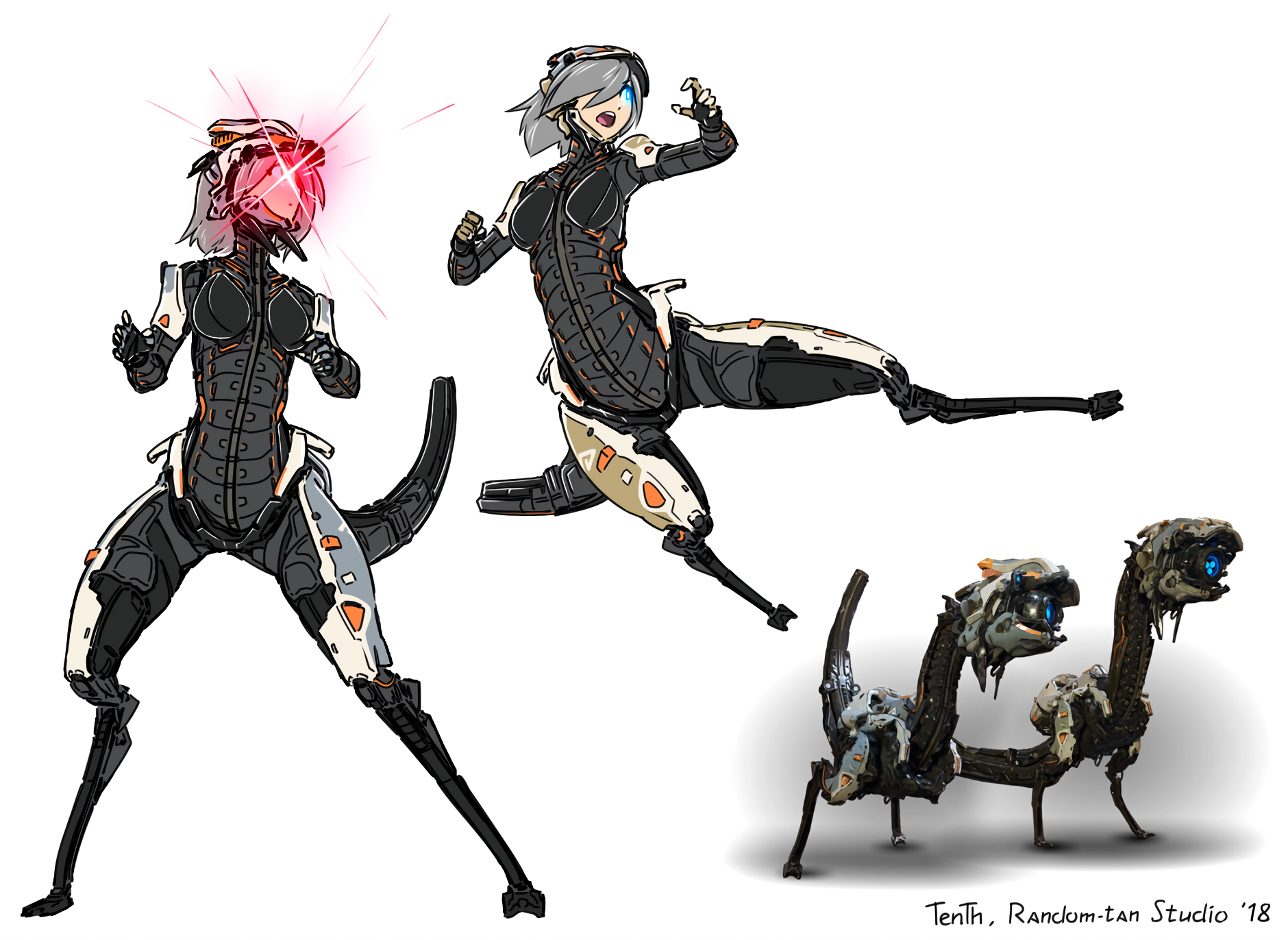

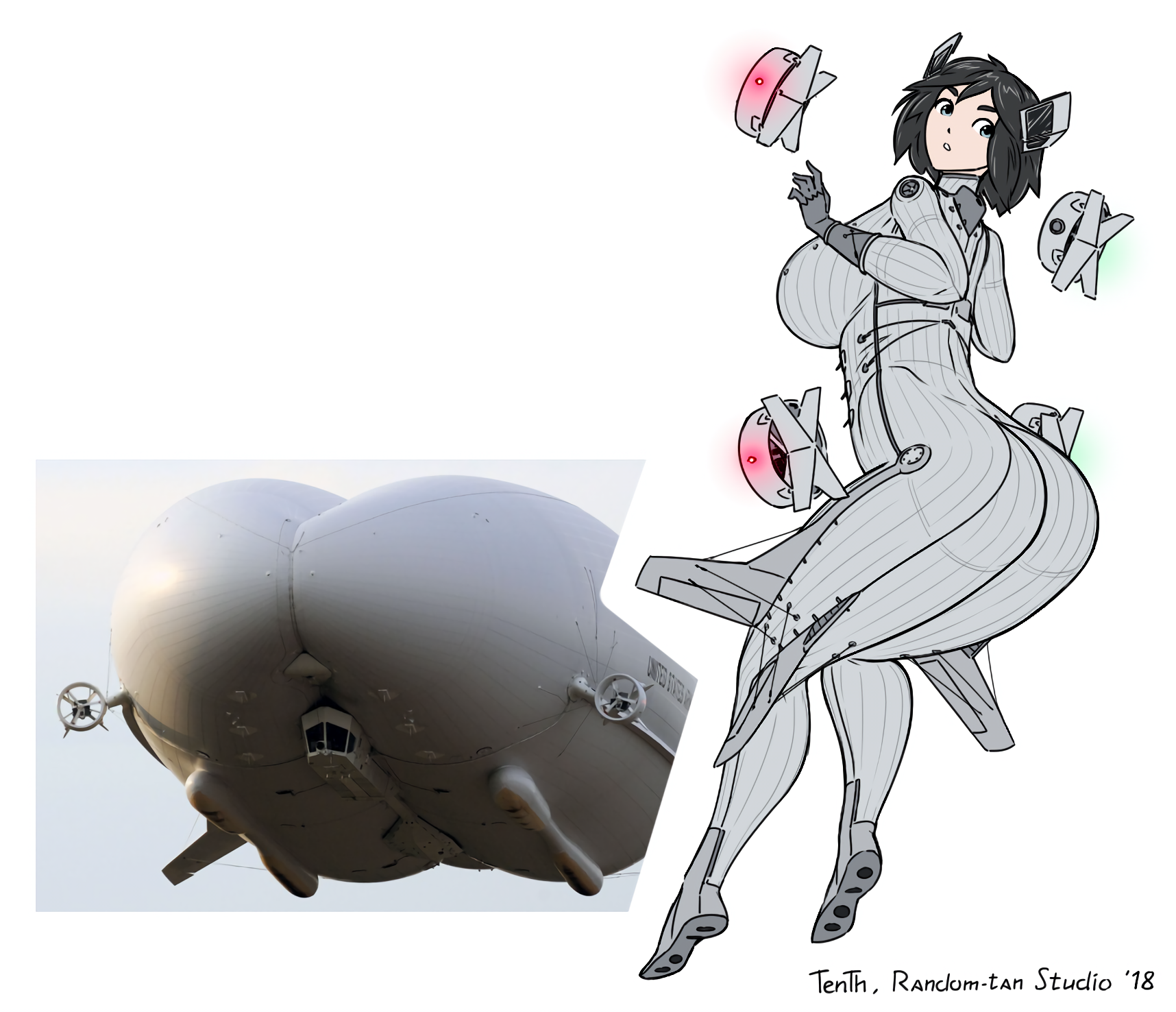

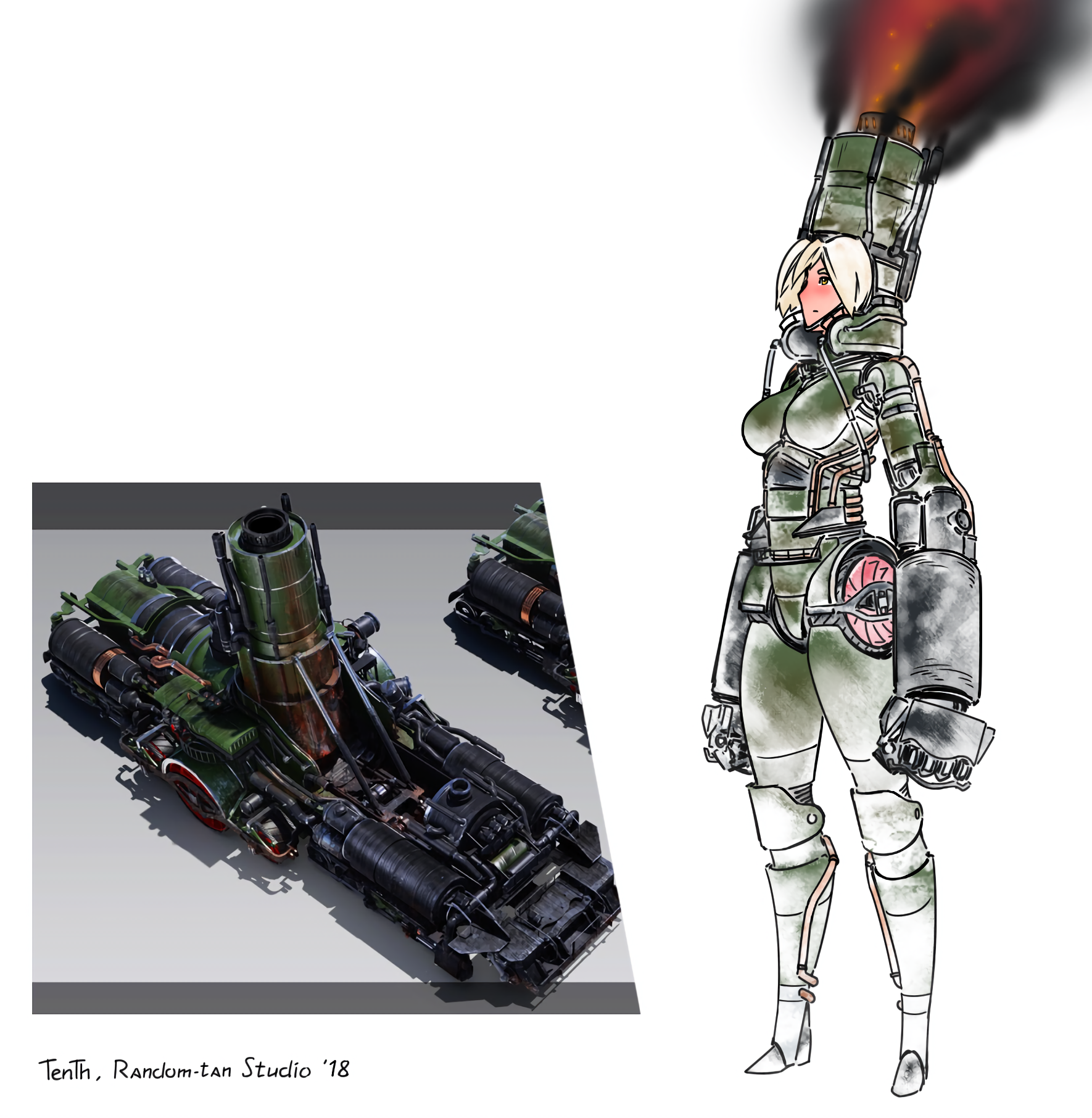

TenTh also did Crabsquid, but I'm posting the images in order, so its turn will come in about 2 weeks. (Edit: here)

Here are some results from elsewhere on the internet, mostly by Dino-Rex-Makes. Feel free to feed the links to your posting script and schedule them.

You know.

I don't... Is there a disgusting story specific to the flamethrower?

Anyway, Elon Musk's enterprises were never not full of stupid ideas. He wanted to pay for his extensive tunnel network just by selling bricks from the displaced soil. Did he expect millions of them to go for hundreds of dollars like limited-edition Supreme-branded ones? Or did he ever consider why roads were built on the surface if tunnels were so easy and profitable?

Around this time, he also claimed that he had perfected solar roof tiles while the demo houses actually featured no functional prototypes. The few units delivered were bad at either purpose. This didn't get nearly as much backlash as it should have, but hyperloop hype was still strong back then.

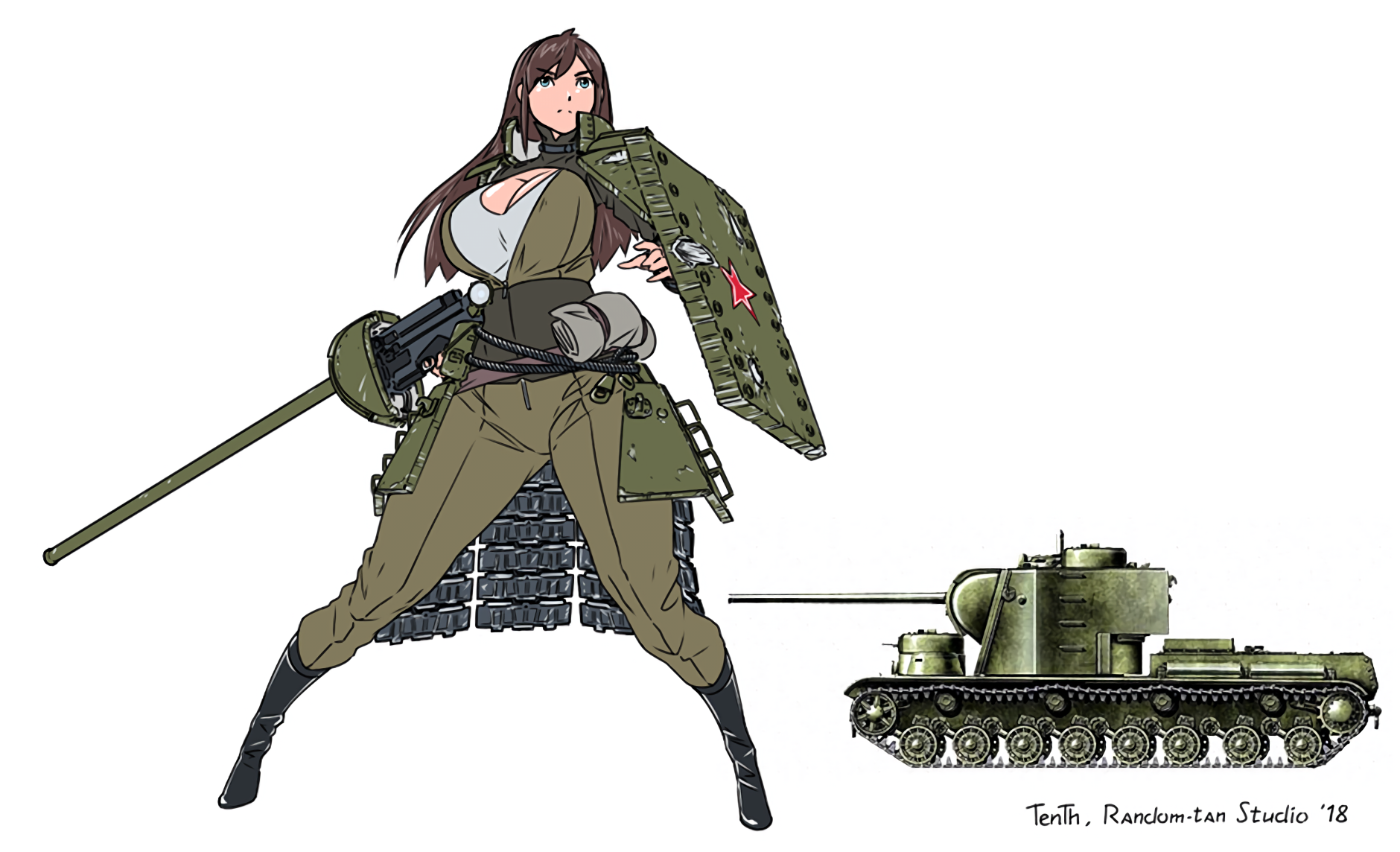

This is one of the more realistic body shapes you'll see on !morphmoe@ani.social.

If you want to block all moe communities, they are conveniently listed in the sidebar.

In real mirror pics, the phone is always perfectly aligned with the frame (obviously).

Needs more ads plastered at weird spots.

Of course.

Actually, shaggy mane (Coprinus comatus) is edible.

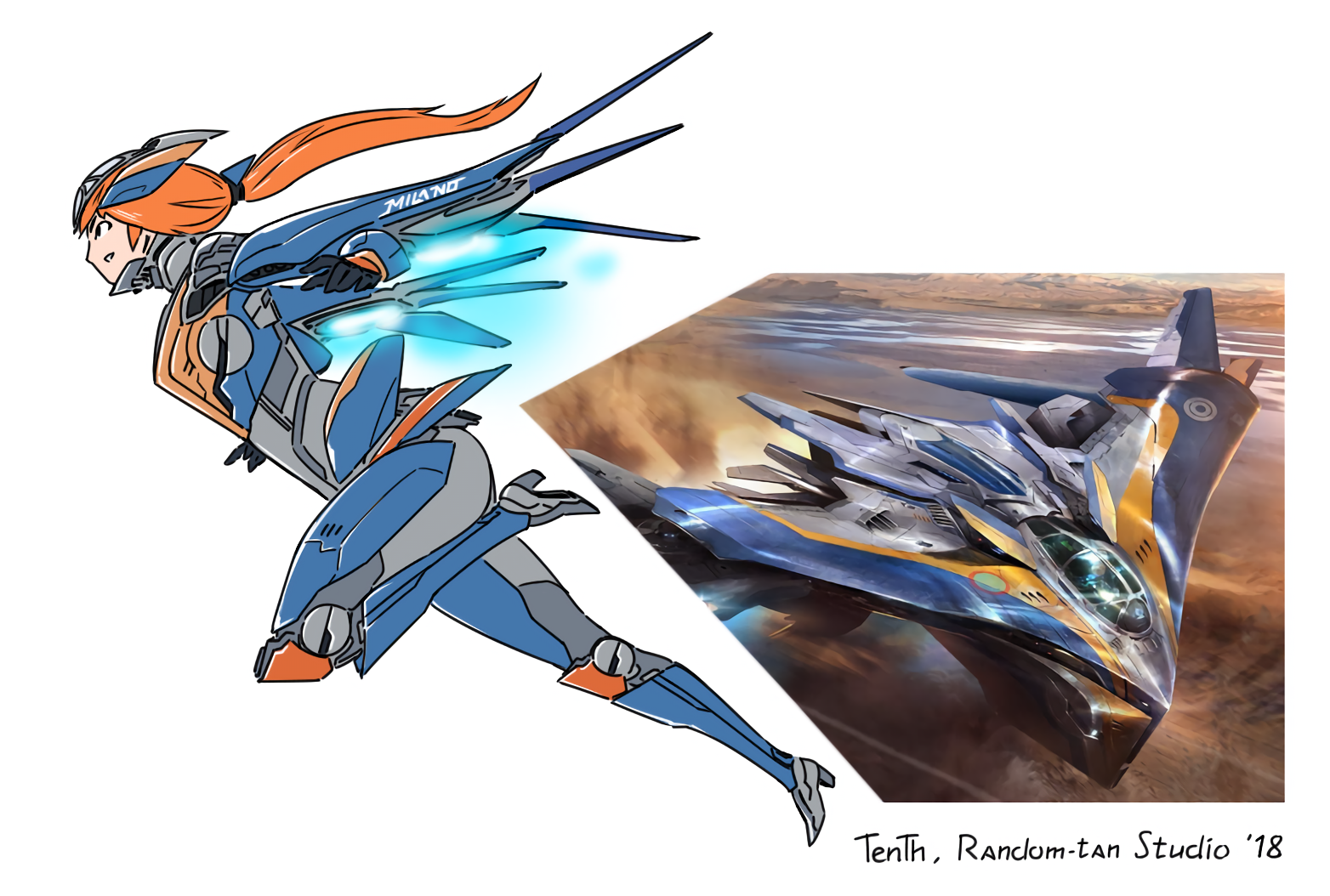

Rare OC on Lemmy. Thanks for this!

A little voodoo doll version of herself on that spear... Kinky

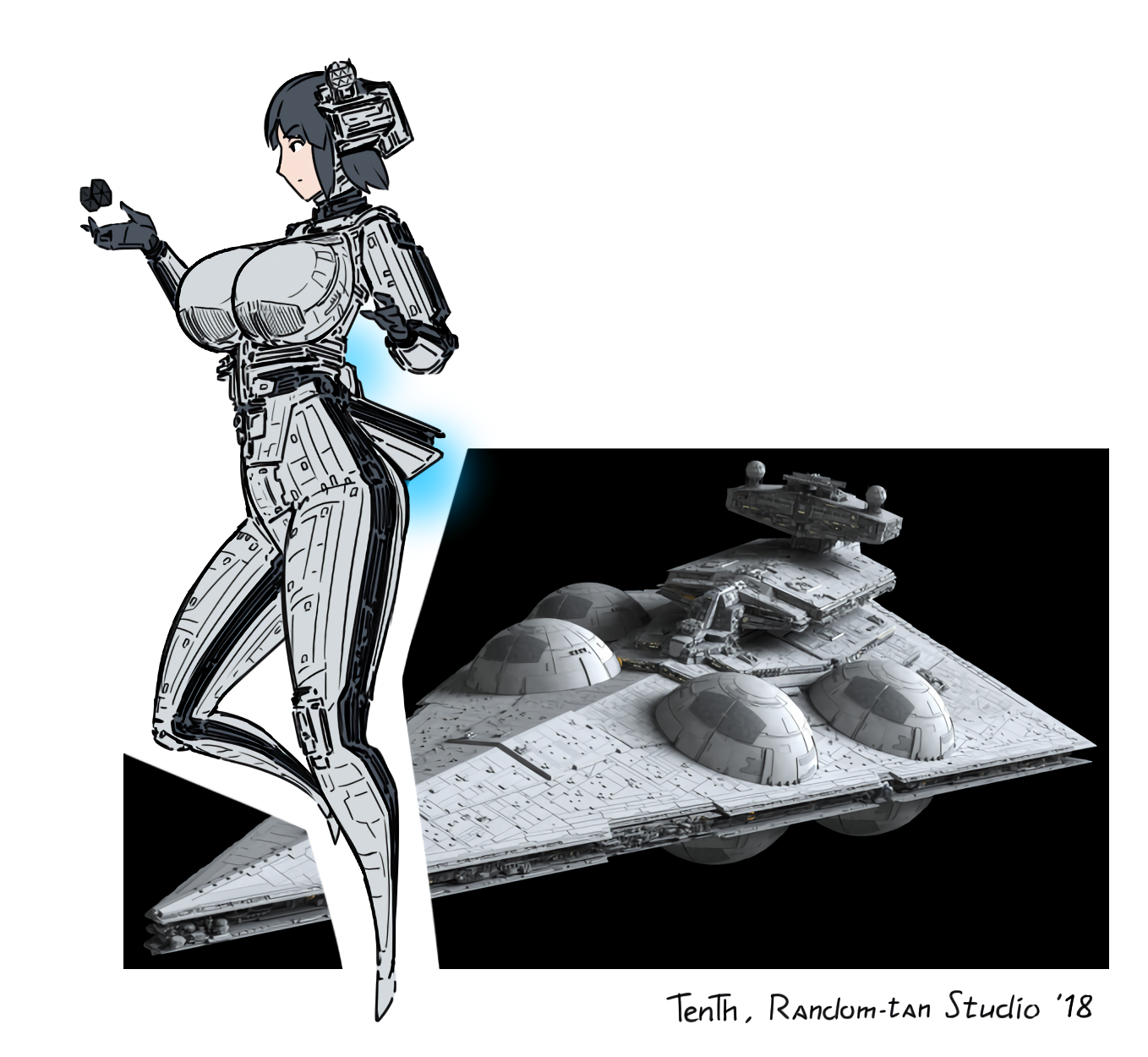

There should be four in the front and four in the back to match the number of hemispheres.