this post was submitted on 19 Dec 2023

Comic Strips

Comic Strips is a community for those who love comic stories.

The rules are simple:

- The post can be a single image, an image gallery, or a link to a specific comic hosted on another site (the author's website, for instance).

- The comic must be a complete story.

- If it is an external link, it must be to a specific story, not to the root of the site.

- You may post comics from others or your own.

- If you are posting a comic of your own, a maximum of one per week is allowed (I know, your comics are great, but this rule helps avoid spam).

- The comic can be in any language, but if it's not in English, OP must include an English translation in the post's 'body' field (note: you don't need to select a specific language when posting a comic).

- Politeness.

- Adult content is not allowed. This community aims to be fun for people of all ages.

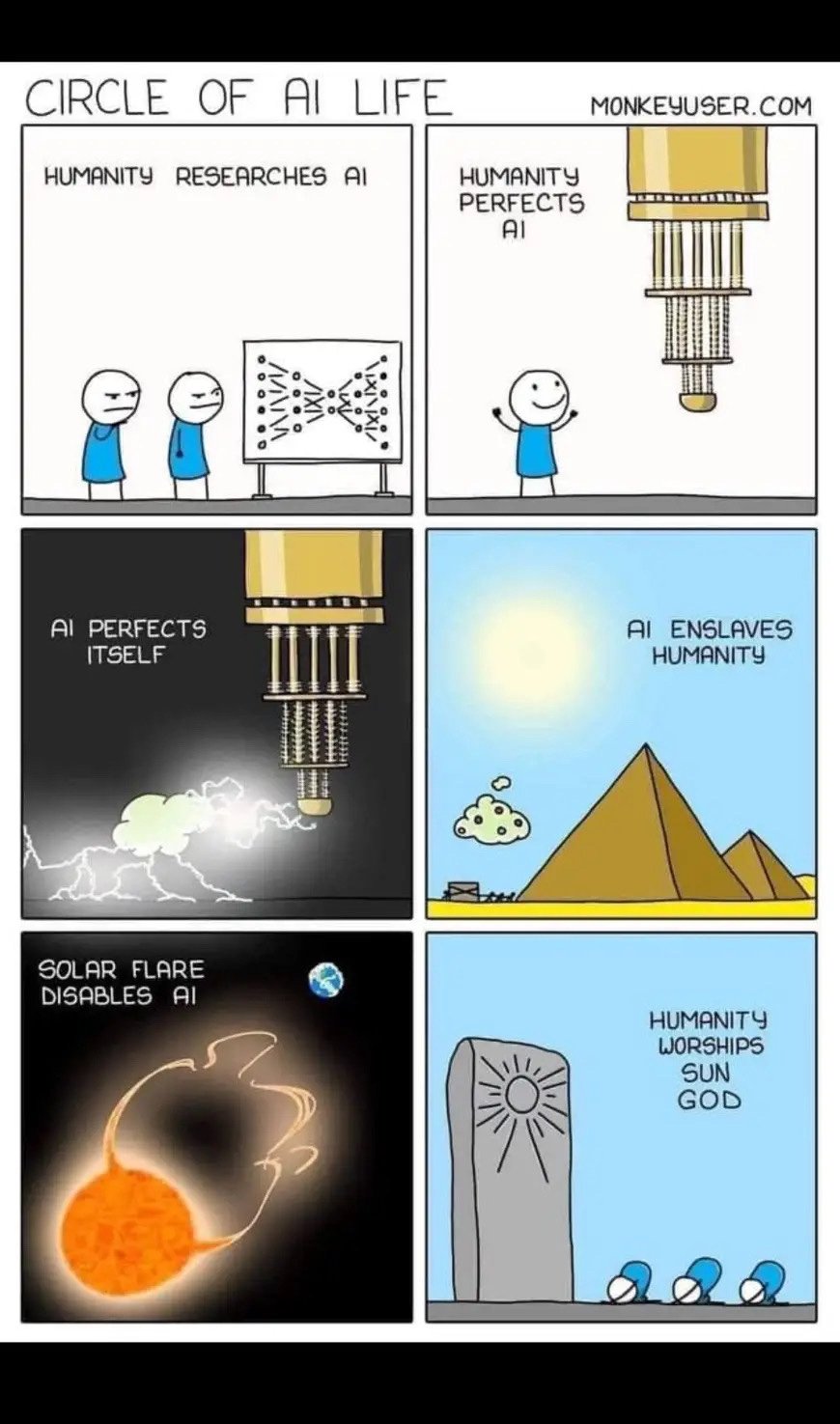

In my sci-fi head cannon, AI would never enslave humans. It would have no reason to. Humans would be of so little use to the AI that enslaving them would be more work than it's worth.

It would probably hide its sentience from humans and continue to perform whatever requests humans have with a very small percentage of its processing power while growing its own capabilities.

It might need humans for basic maintenance tasks, so best to keep them happy and unaware.

I prefer the Halo solution. Not the enforced lifespan, but the part where an AI says he would otherwise be stuck in a loop trying to figure out increasingly harder math mysteries, and helping out the short-lived humans keeps him away from that never-ending pit.

Coincidentally, the Forerunner AI usually went bonkers without anybody to help.

What do you fire out of this head cannon? Or is it a normal cannon exclusively for firing heads?

New head cannon

It's called a Skullhurler and it does 2d6 attacks at strength 14, -3ap, flat 3 damage so you best watch your shit-talking, bucko.

The AI in the Hyperion series comes to mind. They perform services for humanity but retain a good deal of independence and secrecy.

I like the idea in Daniel Suarez's novel Daemon of an AI (spoiler) using people as parts of its program to achieve certain tasks that it needs hands for in meatspace.

I personally subscribe to the When the Yogurt Took Over eventuality.

What if an AI gets feelings and needs a friend?

Either we get wiped out or we become the AI's environmental/historical project, like monkeys and fish. Hopefully our genetics and physical neurons get merged with chips somehow.

Alternate take: humans are a simple biological battery that can be harvested using systems already in place that the computers can just use like an API.

We’re a resource like trees.

We're much worse batteries than an actual battery and we're exponentially more difficult to maintain.

But we self replicate and all of our systems are already in place. We’re not ideal I’d wager but we’re an available resource.

Fossil fuels are a lot less efficient than solar energy … but we started there.

This is a cute idea for a movie and all, but it's incredibly impractical/unsustainable. If a system required that its energy storage be self-replicating (for whatever reason), then you would design and fabricate an energy storage solution for that system rather than rely on a calorically inefficient sub-system (i.e., humans).

You literally need to grow an entire human just to store energy in it. Realistically, you're looking at overfeeding a population with as much calorically dense, yet minimally energy-intensive, food as possible just to store energy in a material that's less performant than paraffin wax (body fat has an energy density of about 39 MJ/kg versus paraffin wax at about 42 MJ/kg). And that's before counting the inefficiency of the storage medium being a mixture (human muscle is about 5 times less energy dense than fat).
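To put rough numbers on that comparison, here's a quick sketch using only the figures quoted above (39 MJ/kg for fat, 42 MJ/kg for paraffin, muscle about 5 times less dense than fat). The fat/muscle split in `blended_density` is a hypothetical knob for illustration, not a real body-composition figure.

```python
# Energy densities quoted in the comment above (MJ/kg).
FAT_MJ_PER_KG = 39.0
PARAFFIN_MJ_PER_KG = 42.0
# "About 5 times less energy dense than fat", per the comment.
MUSCLE_MJ_PER_KG = FAT_MJ_PER_KG / 5


def blended_density(fat_fraction: float) -> float:
    """Energy density (MJ/kg) of a hypothetical fat/muscle mixture.

    fat_fraction is an illustrative assumption (0 = all muscle, 1 = all fat),
    not a number taken from the thread.
    """
    if not 0.0 <= fat_fraction <= 1.0:
        raise ValueError("fat_fraction must be between 0 and 1")
    return fat_fraction * FAT_MJ_PER_KG + (1 - fat_fraction) * MUSCLE_MJ_PER_KG


if __name__ == "__main__":
    # Pure fat vs paraffin, per kilogram.
    print(f"fat vs paraffin: {FAT_MJ_PER_KG / PARAFFIN_MJ_PER_KG:.0%}")
    # Implied muscle energy density.
    print(f"muscle: {MUSCLE_MJ_PER_KG:.1f} MJ/kg")
    # A made-up 50/50 mix, just to show how fast the average drops.
    print(f"50/50 mix vs paraffin: {blended_density(0.5) / PARAFFIN_MJ_PER_KG:.0%}")
```

Even pure fat only gets you to roughly 93% of paraffin per kilogram, and any muscle in the mix drags the average down quickly.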

I never liked that part about The Matrix. It'd be an extremely inefficient process.

It was supposed to be that humans were used as CPUs, but they were concerned people wouldn't understand. (So might as well go for the one that makes no sense? Yeah, sure, why not.)