This is simultaneously the reason I am not buying a current GPU and not buying recent AAA shit.

PC Master Race


The industry needs to appreciate QA and optimization more than ever. I don't feel like getting the latest GPU for a couple of rushed and overpriced pieces of digital entertainment software, the same way I don't feel like getting the newest iPhone every year because of social pressure.

I find myself saying "but why?" about all these spec requirements for Alan Wake 2. Is it some kind of monstrous leap forward in technical prowess? Because outliers like this usually suggest poor optimization, which is bad.

It never seems to me like there's much benefit to the insane resource usage of modern games.

Some of the best fun I ever had was on something like 500MHz and 128 megs of RAM

(could be misremembering entirely, but the point is: not a lot)

Half the time they look a few years out of date as well as run like shit.

If these games made proper use of Resizable BAR, VRAM size wouldn't be an issue.

Well, the game itself is an Nvidia-sponsored title, so you can expect shit hitting the fan. They want you to use their tech.

Yeah, as someone that got bored in the first part of the first one, what could possibly justify this for the series?

Honest question. Do they need to look like actual people before the shadow monsters or whatever attack?

Because mostly the series seemed to be about picking up collectables in the dark while hoping your flashlight doesn't go out.

I mean, I know many people like the series. I agree it doesn't seem like it should be terribly demanding though. I may just be wrong and maybe it's meant to be the best graphics ever, but I suspect that on release we'll see a lot of "meh" and potentially backlash if these reqs don't translate into something no one has seen before.

At the same time, Armored Core 6 has pretty stunning visuals and runs pretty well even on a 2060. Almost like graphics can be done well with a good art style and optimisation, not just throwing more hardware at the issue.

AC is nowhere as visually stunning as AW.

These requirements are such horseshit. What's the point of making everything look hyperrealistic at 4K if nobody can run the damn game without raiding NASA's control room for hardware?

That's the point of customizable settings

Future proofing

I honestly couldn't give two fucks about how a game looks if it's going to cost me $2000 to run it.

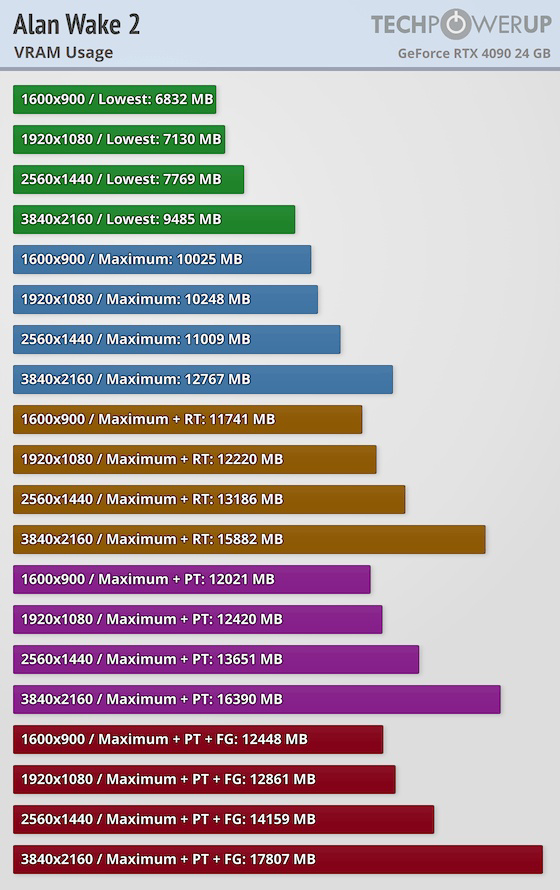

Yikes, even 1440p isn't safe. My 12GB 6700 XT is looking a bit outdated already. It barely has enough at max settings without the fancy stuff.

I think it's relatively easy to avoid these games, they're obviously not utilizing these resources well.

It's OK, thanks to Nvidia's amazing value I have a whopping 10GB on a 3080 that I paid way too much for! My old Vega 64, from 2017, had 8GB.

Yeah, RIP my 3080. Waited a whole year in that EVGA queue to get it.

My 3070 apparently can't run it in low detail at the native resolution of my monitor. Weak.

Did you ever expect to play at 4K with that card?

I have an RTX 3060 and never thought that would make sense.

Me with GTX 1060 3 GB: Ok.

That said, I am probably going to finally upgrade to RTX 3060 this year or next (or some AMD equivalent, if I am going to switch back to GNU/Linux).

I have a 3070 and I'm scared

My 3060 Ti has been serving me very well. I've played games that look unbelievably good with it (Death Stranding, for example), but these new requirements are crazy. Especially with UE5 games, I can't help but think it's just shitty optimization, because they don't look good enough to justify this.

I really don't mind reading as a hobby and other IRL things. Games are kind of shitty nowadays.

RIP 4070

The 4070 is consistently faster than the 7800 XT, and even the 7900 XT (in ray tracing), in almost all settings. Only at 4K with ray tracing is it VRAM-bottlenecked. But even though the 7800 XT and 7900 XT aren't VRAM-bottlenecked, their performance at those settings is shit anyway (sub-30 fps), so that's irrelevant.

I don't see how having 20 fps is better than having 5 fps. Both are unplayable settings for either card.

Wasn't trying to compare to any specific other cards; this game is going to destroy a lot of them. Just commenting on Nvidia skimping on VRAM for some very pricey cards.

Joke's on you, I have a 15" 1024x768 CRT monitor. So my older-generation RTX 3090 is just fine.

Bro, I just got a 6950 XT. I thought I was set 🥲

My 2060 can't play any of these. Why is it so resource intensive?

While this is not a good thing, we have to remember that games will take advantage of more resources than needed if they're available. If keeping more things in memory just in case increases performance even a little bit, there's no reason that they shouldn't do it. Unused memory is wasted memory.
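To make the "unused memory is wasted memory" point concrete, here is a minimal sketch assuming a hypothetical engine that keeps loaded assets resident up to a fixed memory budget and only evicts the least-recently-used ones when a new load would overflow it. This is a generic LRU cache swapped in for illustration; the `AssetCache` class, asset names, and sizes are all made up, and it is not how Alan Wake 2 or any particular engine actually manages VRAM.

```python
from collections import OrderedDict


class AssetCache:
    """Hypothetical LRU cache that fills its memory budget opportunistically."""

    def __init__(self, budget_mb: int) -> None:
        self.budget_mb = budget_mb
        self.used_mb = 0
        # Maps asset name -> size in MB; insertion order tracks recency (oldest first).
        self._entries: "OrderedDict[str, int]" = OrderedDict()
        self.loads = 0  # number of simulated (slow) loads from disk

    def get(self, name: str, size_mb: int) -> None:
        if name in self._entries:
            # Cache hit: mark as most recently used, no reload needed.
            self._entries.move_to_end(name)
            return
        if size_mb > self.budget_mb:
            raise ValueError(f"{name} ({size_mb} MB) exceeds the whole budget")
        # Cache miss: evict least-recently-used assets only when we must.
        while self.used_mb + size_mb > self.budget_mb:
            _, evicted_size = self._entries.popitem(last=False)
            self.used_mb -= evicted_size
        self._entries[name] = size_mb
        self.used_mb += size_mb
        self.loads += 1


def run(budget_mb: int, accesses: list[tuple[str, int]]) -> AssetCache:
    """Replay an access pattern against a cache with the given budget."""
    cache = AssetCache(budget_mb)
    for name, size in accesses:
        cache.get(name, size)
    return cache


# The same hypothetical access pattern against a small and a large budget.
pattern = [("rock", 2), ("tree", 3), ("house", 4), ("rock", 2), ("tree", 3)] * 3
small = run(8, pattern)
large = run(16, pattern)
print(small.loads, small.used_mb)
print(large.loads, large.used_mb)
```

With the 8 MB budget this pattern keeps evicting assets it will need again soon, for 11 slow loads in total; with 16 MB everything stays resident after the 3 first-touch loads and the cache ends up holding 9 MB. So a game that "uses" more memory on a bigger card isn't necessarily wasting it.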

Hasn't this been an argument by PC gamers against consoles, that games aren't deliberately held back by old hardware?

I think it was more about games releasing with 30 or 60 fps hard caps back in the day than about graphics being held back.

Laughing in 7900 XTX

If you'd read the whole thing, you'd have found that those numbers are overblown. By those numbers, a 4070 should tank at 1440p and run out of VRAM, but it doesn't.

Even with PT (path tracing) it's fine.

Optimization is supposedly fine because it looks the part. Optimization doesn't mean make everything run on old hardware. It means make it run as well as possible. There's only so much you can do while retaining the fidelity they're going for.

Now that's some mighty GPU memory usage! Reminds me of some of the huge ass Blender renders I've done where it gobbles up all the memory it can 🍽️

It's been interesting seeing the commotion about the performance requirements for Alan Wake 2, but I'm fine with it, since it's not something I'm planning to buy any time soon, if ever, with it being an Epic exclusive.

The most likely way I'll end up playing it is years later if it gets given away, and by then I'll probably have upgraded my hardware.

I have an RTX 4080 with 16GB of VRAM and won't be able to play it on my Samsung Odyssey G7 at 4K with max settings. That is wild.

Until they start making 4K displays at 23", I'm not interested.

I'm so sick of monitors getting larger and larger like this. I sit about arm's length from my monitor, and even with a 27" I have to physically swivel my head to look at both the left and right of the screen.

If I had an ultrawide that curved around me, and software to split it into 3 distinct areas so that centered windows only popped up in my immediate frontal field of view, or if games let me keep the HUD within a 60-degree field of view while still displaying the rest of the game in my periphery, I'd be happy.

But you want to fuck up my UI, make it so I have to physically turn my head to see the HUD elements, AAAAAND fuck my framerates hard? Nah. I'll just take the lower fidelity.

Does this game look or play better than RE4 remake?