NO ONE FUCKING ASKED FOR ANY OF THIS SHIT

It was never for you, the user. Like any advertising company, DuckDuckGo wants to reduce the cost of predicting what products its user will buy, which, at this point, means harvesting your thoughts and asking an AI model what you will buy, even if you've already left.

TAKE MY SHIT JOB IF YOU WANT JUST DON'T MAKE EVERYTHING ELSE WORSE AS WELL

That rate of profit do be declining. I forget what pod was talking about it, but they were saying that tech execs think this will be what returns them to the good old days of high profits.

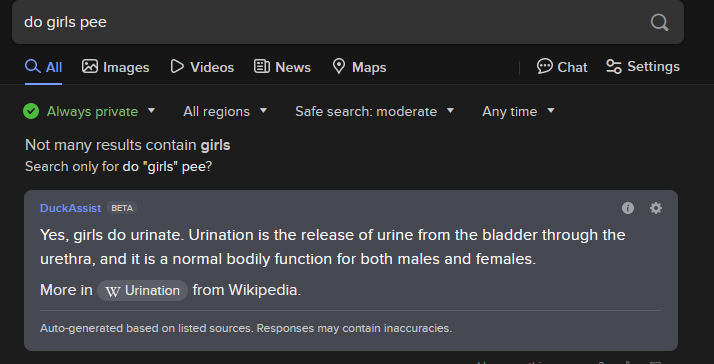

Yeah, it's already inaccurate

It is very cool that we are not only using a lot more energy to fuel this shit, but also that entire energy networks are being overloaded to do it.

Look forward to rolling brownouts in order to keep faulty search results and terrible images coming.

Unironically, I've been getting better search results from Yandex since this AI dogshit started picking up steam. I'm contemplating switching over full time.

It's good for finding shit that does not appear on Google at all, but it also finds other super weird shit too. To be fair, Google also shows me weird religious websites and state department propaganda journal articles seemingly at random on unrelated results.

I see a lot more crank shit with Yandex while DDG is almost entirely Western MSM.

DDG is censored lol. The owner literally said that he'll censor Russian "Disinformation" and promote western shit.

It's great too

I switched to searx and it's actually perfect

How do you make sure that results actually appear? I'll be using a public instance with certain engines, and then those engines break and I either have to switch instances or engines.

Searx.work works on my machine. I wish I could help you but I've never had that issue. You could self host and change default instances if you really want to be sure.

What kind of violence is theoretically possible against ai infrastructure right now, asking for myself

Find a random data centre, break in, and start hitting servers with a hammer. Sooner or later you're bound to knock out an ai one.

Randomly hitting servers is too inefficient. The easiest way is to find the loudest server and go ham on that because apparently the Nvidia AI pod things are unbelievably loud

this is the most realistic program i've yet seen on this site

Another useless fucking chatbot

I use Startpage. It's been pretty nice, it feels like old Google.

I'm probably going to wind up paying for Kagi soon. One more monthly tax to make the internet halfway usable again

I did some digging and it seems that Kagi does have AI features, however their policy is that AI should always be opt-in every single time; only activated when you choose. If you want to read the techbro blog, it's explained a bit more here: https://blog.kagi.com/kagi-ai-search

Probably best to pay monthly instead of yearly in case they do something you don't like :yea:

The AI chat is helpful though

please demonstrate a case where this has been useful to you

I asked it the difference between soy sauce and tamari and it told me.

literally just google "wikipedia tamari"

and essentially all it's doing is plagiarizing a dozen other answers from various websites.

oooh tamari

so like, could you have answered that question without spinning up a 200W GPU somewhere to do the LLM "inference"?

tamarind the fruit or tamarin the genus?

Are these the same?

and what part of that required an AI?

I never said it did. It was just faster than searching through multiple search results and reading through multiple paragraphs.

How did you know the answer was correct?

How do you know any information is correct?

Do you really not see why I asked my rhetorical question or do you just want to bicker?

I wasn't bickering. You're the one trying to argue. It sounds like you're implying that information from AI is inherently incorrect, which simply isn't true.

First, at the risk of being a pedant, bickering and arguing are distinct activities. Second, I didn't imply that LLMs' results are inherently incorrect. However, it is undeniable that they sometimes make shit up. Thus, without corroboration from a more trustworthy source, an LLM's outputs can't be trusted.