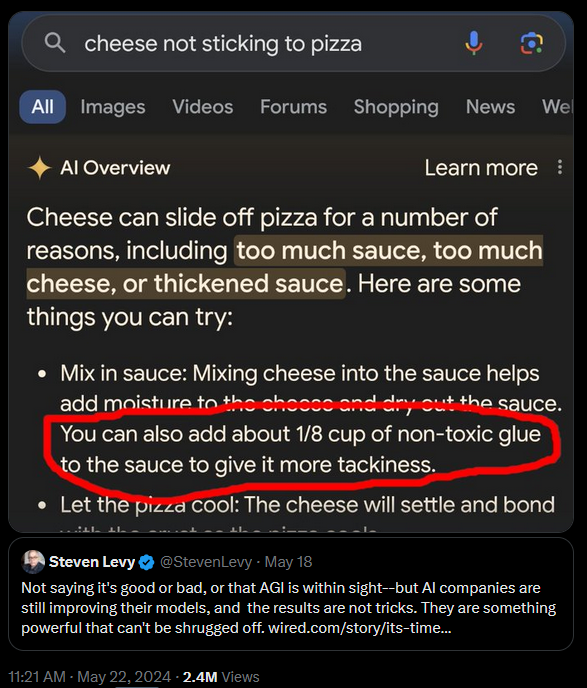

Oh look, it has no ability to recognize or manipulate symbols and no referents for what those symbols would even represent.

It's funny. If you asked any of these AI-hyping effective altruists whether a calculator 'understands' what numbers mean or whether a video game's graphics engine 'understands' what a tree is, they'd obviously say no, but since this chunky, excessive calculator's outputs are words instead of numbers or textured polygons, suddenly it's sapient.

It's the British government's fault for killing Alan Turing before he could scream from the rooftops that the Turing test isn't a measure of intelligence.

The fact that it's treated that way is just evidence that none of the AI bros have actually read "Computing Machinery and Intelligence." It's like the first fucking line of the paper.

AI bros are the absolute worst.

When I was in college, for one of my classes, one of our assignments was to use a circuit design language to produce a working, Turing-complete computer from basic components like 1-bit registers and half-adders. It really takes the mystique out of computation and you really see just how basic and mechanical computers are at their core: you feed signals into input lines, then the input gets routed, stored, and/or output depending on a handful of deterministic rules. At its core, every computer is doing the same thing that basic virtual computer did, just with more storage, bit width, predefined operations, and fancier ways to render its output. Once you understand that, the idea of a computer "becoming self-aware and altering its programming" is just ludicrous.
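To make the "basic and mechanical" point concrete, here is a minimal Python sketch of the kind of building blocks described above: a half-adder, a full-adder built from two half-adders, and an 8-bit ripple-carry adder chained from those. This is an illustration under stated assumptions, not the circuit design language from the class; the function names and the 8-bit width are hypothetical choices made for the example.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two 1-bit inputs; return (sum_bit, carry_bit)."""
    return a ^ b, a & b  # XOR gives the sum bit, AND gives the carry bit


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, built from two half-adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2  # at most one of the two half-adders can carry


def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers one bit at a time, like a ripple-carry adder.

    The width is a hypothetical register size; any carry out of the top bit
    is discarded, so results wrap around modulo 2**width.
    """
    result = 0
    carry = 0
    for i in range(width):
        a = (x >> i) & 1  # route bit i of each input into the adder stage
        b = (y >> i) & 1
        s, carry = full_adder(a, b, carry)
        result |= s << i  # store the sum bit in position i of the output
    return result


print(ripple_add(3, 5))      # fits in 8 bits
print(ripple_add(200, 100))  # overflows 8 bits and wraps around
```

Every step is a fixed, deterministic rule applied to input bits; nothing in the chain ever decides to change its own wiring.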

Looks pretty sentient to me

no but you see it's exactly like how a brain works (i have no idea how a brain works)

"it works how I think a brain works!"

we're all just eddies in the two dimensional surface of a black hole

never not hallucinating

We love Chinese rooms don't we folks?

Just one more filing cabinet of instructions and we'll be done building god. I'm sure of it

"It still has bugs"

Then why have you deployed it before it's safe to do so? Back in the day, shit like this would have gotten most products recalled, or their makers sued, for endangering people with false information.

Capitalism is totally off its rocker

Trying to record a passionate rebuttal but my lips were sealed shut by the glue pizza

The cybertruck is street legal

Only in countries which ignore pedestrian safety I guess?

Italians hate this one weird trick!

what's the problem? it says non-toxic

anyway I think xanthan gum would work here but idk shit about making pizza

I know a LOT about making pizza. I don't understand how cheese isn't sticking in the first place. Do they not cook the pizza?

I can only assume Americans are using so much oil on pizza that all structural integrity is compromised and it's more like greasy tomato soup served on flatbread.

Too much sauce could be the culprit as well: if the cheese is floating on a lake of sauce while melting, you've got problems. Another is: LET YOUR PIZZA SET FOR LIKE 5 MINUTES. If they're eating without waiting, the cheese doesn't have a chance to resettle and will be hard to get a solid bite on, so you end up dragging the whole mass. Also likely is that they're using wayyyy too much cheese and that mozzarella is turning into a big disc of borderline bocconcini.

LET YOUR PIZZA SET FOR LIKE 5 MINUTES

no

Resist the temptation. Just, like, go into a different room for a bit. This applies to most hot foods as well. Food keeps cooking after it's taken off a heat source, and waiting until it's all the way finished does make a big difference.

I will burn my mouth or this country down before waiting five minutes!

I only succeed like half the time. The trick that works for me is smoking a joint after pulling food or sparking it just beforehand.

Maybe they're using a cheese that doesn't melt as easily and a low temp oven? It doesn't make sense to me, either.

Both good diagnoses

It's probably from using too much sauce

Sauce should really be a topping. Your base should be oil and maybe some tomato paste and garlic.

The sauce-heavy Big 3 chains in America really don't know how to make pizza.

I don't know about elsewhere on the planet, but in the USA, pre-shredded cheese sold at the grocery store is usually dusted with an anti-caking powder to keep the shreds from clumping back together. Consequently, this shredded cheese always takes longer and higher temperatures to melt and reincorporate unless it's rinsed off first. Most Americans aren't aware of this, so shredded cheese topping on shit often just comes out badly.

Silly Putty is non-toxic and provides even better tack. Get the red kind and stretch it over the dough in place of sauce.

Mmm salt

I thought mentioning tackiness was an implicit suggestion to try tacky glue

OK, I understand how the AI got this one wrong. You can make a "glue" by mixing flour and water together. You can also thicken a sauce by adding flour. So the AI just jumbled it all up into this. In its dataset, it's got "flour + water = non-toxic glue". However, adding flour to a sauce, which contains water, also thickens the sauce. So in the AI's world, this makes perfect sense: adding the "non-toxic glue" to the sauce will make it thicker.

This just shows how unintelligent so-called "Artificial Intelligence" actually is. It reasons like a toddler. It can't actually think for itself; all it can do is try to link things from its dataset that it thinks are relevant to the query.

You're actually giving it too much credit here. It seems to have lifted the text from a Reddit joke comment that got shared/archived/reposted a lot (enough?) and was therefore one of the 'most frequent' text strings returned on the subject.

Time to make more shitposts to fuck with the LLMs

Wonder what the countdown is until an LLM regurgitates that 4chan post that tried to trick kids into mixing bleach and ammonia

You're officially smarter than "AI" because you gave relevant and useful information

Wtf lol

This is pure speculation. You can't see into its mind. Commercially deployed AIs have recommended recipes involving poison in the past, including one for mustard gas, so giving it the benefit of the doubt and assuming it was even tangentially correct is cutting it more slack than it has earned.

So, best case, it regularly commits one of the most basic and widely known logical fallacies, affirming the consequent (flour + water -> non-toxic glue, "therefore" non-toxic glue -> flour + water). Sorry, but if your attempt to make a computer use inductive reasoning throws out the window the deductive reasoning that computers have always been good at because of its simplicity, then I think you've failed not only at "artificial intelligence", but at life.
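For anyone who wants the fallacy spelled out mechanically, here is a small truth-table sketch in Python. It enumerates every assignment of two propositions, P and Q (read them as, say, "it's flour + water" and "it's non-toxic glue"; that mapping is just an illustrative assumption), and collects the rows where all premises are true but the conclusion is false. Valid modus ponens has no such rows; affirming the consequent does.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: P -> Q is false only when P is true and Q is false."""
    return (not p) or q


def counterexamples(premises, conclusion):
    """Return every (P, Q) row where all premises hold but the conclusion fails."""
    rows = []
    for p, q in product([False, True], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            rows.append((p, q))
    return rows


# Modus ponens: P -> Q, P, therefore Q (valid, so no counterexamples).
modus_ponens = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q
)

# Affirming the consequent: P -> Q, Q, therefore P (invalid).
affirming_consequent = counterexamples(
    [lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p
)

print("modus ponens counterexamples:", modus_ponens)
print("affirming the consequent counterexamples:", affirming_consequent)
```

The single counterexample for affirming the consequent is the row where P is false and Q is true: something can be non-toxic glue without being flour and water.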

I think we'll see someone's AI-generated paper use the ol' Reddit switcharoo within 2 years.

As a large language model, I cannot advocate for or against holding one's beer as they dive into the ol' Reddit switcharoo link chain.

This shit is gonna get people killed. This is on the level of those old 4chan toxic fume "pranks".

I don't want to ruin my glue with pizza

100% correct statement.